Face Mask Detection - Inference - Part 3

If you are just after the code, you can find it on GitHub.

Overview

Now that we have a trained model, it is time to start making some inferences!

As mentioned previously, you will require a Google Coral USB Edge TPU accelerator to make use of these models, as they have been optimised for it. If you do not wish to use the TPU with your trained model, there is no need to quantize it. Bear in mind that a model compiled for the Edge TPU will not run without the accelerator attached.
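To make "quantize" a little more concrete: TensorFlow Lite stores 8-bit quantized values using an affine mapping, `real_value = scale * (quantized_value - zero_point)`, which is what lets integer-only hardware such as the Edge TPU run the model. The sketch below shows that arithmetic only; the `scale` and `zero_point` values are illustrative assumptions, not numbers taken from the face mask model.

```python
# Affine (asymmetric) int8 quantization, as described in the TensorFlow Lite spec:
#   real_value = scale * (quantized_value - zero_point)
# The scale/zero_point used below are illustrative, not from the trained model.

def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a float to int8: round to nearest (Python's round breaks ties to even),
    then clamp to the int8 range [-128, 127]."""
    q = round(x / scale) + zero_point
    return max(-128, min(127, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Recover the approximate float value from its int8 representation."""
    return scale * (q - zero_point)


if __name__ == "__main__":
    scale, zero_point = 0.0078125, 0  # hypothetical: covers roughly [-1.0, 0.99]
    for x in (-1.0, 0.5, 0.123):
        q = quantize(x, scale, zero_point)
        print(f"{x:+.3f} -> {q:4d} -> {dequantize(q, scale, zero_point):+.5f}")
```

The round trip loses at most half a `scale` step of precision for in-range values, which is the accuracy trade-off you accept in exchange for running on the TPU.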

The inference code is packaged inside a Docker image, which affords many benefits as described at the start of this series.

Outputs from the inferences are currently available via a web page, since a Docker container cannot easily access the host's display. Thanks to the modular design, it would be straightforward to add code that transmits the inference data to the cloud.
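As a rough illustration of that extra code, here is a minimal sketch that packages a frame's detections as JSON, ready to hand to whichever cloud service you choose (for example via an HTTP POST with `urllib.request`). The function name, the `(label, score, box)` tuple format and the payload field names are all hypothetical and not part of the project's code.

```python
import json
from datetime import datetime, timezone


def detections_to_payload(detections, device_id):
    """Serialise detections into a JSON string for a cloud endpoint.

    `detections` is a list of (label, score, box) tuples. This structure and the
    field names below are hypothetical, chosen only for illustration.
    """
    payload = {
        "device_id": device_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),  # UTC, ISO 8601
        "detections": [
            {"label": label, "score": round(score, 3), "box": list(box)}
            for label, score, box in detections
        ],
    }
    return json.dumps(payload)


if __name__ == "__main__":
    print(detections_to_payload([("mask", 0.91234, (0.1, 0.2, 0.5, 0.6))], "rpi-01"))
```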

I have provided Docker images for the Raspberry Pi (ARMv7) and AMD64 (x86-64) architectures.
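If you are unsure which of the two prebuilt images suits your host, a small sketch like the one below picks one based on `platform.machine()`. It assumes the common values that function reports on these systems (`armv7l` on 32-bit Raspberry Pi OS, `x86_64` on 64-bit Linux PCs); other architectures, such as `aarch64` on a 64-bit Pi OS, are deliberately left unmapped.

```python
import platform

# Prebuilt images from this post, keyed by the value platform.machine() reports.
# Only the two architectures covered by this post are mapped.
IMAGES = {
    "armv7l": "cloudcommanderdotnet/rpitpuinference:latest",
    "x86_64": "cloudcommanderdotnet/amd64tpuinference:latest",
}


def image_for_host(machine=None):
    """Return the prebuilt image name for the given (or current) architecture."""
    machine = machine or platform.machine()
    try:
        return IMAGES[machine]
    except KeyError:
        raise ValueError(f"No prebuilt image for architecture {machine!r}") from None


if __name__ == "__main__":
    try:
        print("docker pull", image_for_host())
    except ValueError as err:
        print(err)
```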

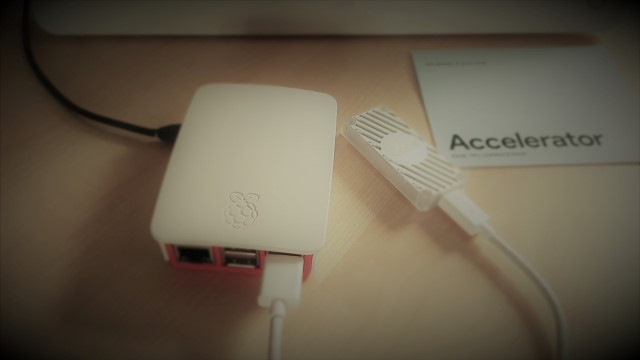

Raspberry Pi / ARMv7

This container packages all the requirements needed to perform inference using a TensorFlow Lite model trained with the TensorFlow Object Detection API, and assumes you are using a Google Coral Edge TPU.
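For context, SSD models exported from the Object Detection API to TensorFlow Lite usually end in a `TFLite_Detection_PostProcess` op that emits four outputs: boxes as normalised `[ymin, xmin, ymax, xmax]`, class indices, scores, and the number of valid detections. Assuming that output layout, a minimal sketch of turning those raw values into readable detections might look like this. The `LABELS` list and the 0.5 threshold are hypothetical, and this is not the project's own post-processing code.

```python
# Hypothetical label list for a two-class face mask model.
LABELS = ["mask", "no_mask"]


def filter_detections(boxes, classes, scores, count, threshold=0.5):
    """Keep detections whose score meets the threshold.

    Assumes the four outputs of an SSD model's TFLite_Detection_PostProcess op:
    normalised [ymin, xmin, ymax, xmax] boxes, class indices, scores and the
    number of valid detections (entries beyond `count` are padding).
    """
    results = []
    for i in range(int(count)):
        if scores[i] >= threshold:
            label = LABELS[int(classes[i])]
            results.append((label, float(scores[i]), tuple(boxes[i])))
    return results


def to_pixels(box, width, height):
    """Convert a normalised [ymin, xmin, ymax, xmax] box to pixel (x1, y1, x2, y2)."""
    ymin, xmin, ymax, xmax = box
    return (round(xmin * width), round(ymin * height),
            round(xmax * width), round(ymax * height))


if __name__ == "__main__":
    boxes = [[0.1, 0.2, 0.5, 0.6], [0.0, 0.0, 1.0, 1.0]]
    for label, score, box in filter_detections(boxes, [0, 1], [0.9, 0.3], 2):
        print(label, score, to_pixels(box, 640, 480))
```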

You will also require a webcam plugged into your host, although you can change the source to a network-based camera if you wish.
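One way to make the video source switchable is sketched below, assuming frames are read with OpenCV, whose `cv2.VideoCapture` accepts either an integer device index or a stream URL. The `CAMERA_SOURCE` environment variable is a hypothetical convention: the prebuilt images do not read it, so you would need to add it to the inference code yourself and then pass it with `docker run -e CAMERA_SOURCE=...`.

```python
import os


def camera_source(value=None):
    """Return a webcam index or a network stream URL.

    A value made only of digits (e.g. "0") is treated as a local device index;
    anything else (e.g. "rtsp://192.168.1.50:554/stream") is returned as a URL.
    CAMERA_SOURCE is a hypothetical environment variable, defaulting to "0".
    """
    if value is None:
        value = os.environ.get("CAMERA_SOURCE", "0")
    return int(value) if value.isdigit() else value


if __name__ == "__main__":
    # With OpenCV installed this would be used as: cv2.VideoCapture(camera_source())
    print(repr(camera_source()))
```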

Prebuilt Container

If you wish to make use of a prebuilt Docker image, you can pull it from Docker Hub using the following command:

docker pull cloudcommanderdotnet/rpitpuinference:latest

Running the Container

Make sure you have your webcam and Coral USB plugged in.

To run the container, enter the following command:

docker run -t -i --privileged -p 8000:5000 -v /dev/bus/usb:/dev/bus/usb cloudcommanderdotnet/rpitpuinference:latest

The container is started in privileged mode, the RPi's USB bus (/dev/bus/usb) is mounted into the container so it can reach the Coral accelerator, and the container's port 5000 is published on port 8000 of the RPi.
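The `-p` flag takes `HOST:CONTAINER`, which is easy to get backwards. This sketch rebuilds the `docker run` command above as an argument list so the order is explicit; `docker_run_args` is a hypothetical helper, and the defaults simply mirror the ports used in this post.

```python
import shlex


def docker_run_args(image, host_port=8000, container_port=5000):
    """Build the `docker run` command from this post as an argument list.

    -p takes HOST:CONTAINER, so the web server listening on container_port
    inside the container is reached on host_port of the host.
    """
    return [
        "docker", "run", "-it", "--privileged",
        "-p", f"{host_port}:{container_port}",
        "-v", "/dev/bus/usb:/dev/bus/usb",  # expose the host's USB bus (Coral TPU)
        image,
    ]


if __name__ == "__main__":
    # Print the equivalent shell command; subprocess.run(args, check=True) would run it.
    print(shlex.join(docker_run_args("cloudcommanderdotnet/rpitpuinference:latest")))
```

Changing `host_port` is all you need if port 8000 is already taken on your host.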

Now you just have to navigate to http://<RPi IP address>:8000 in a web browser to see the object detection taking place.
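If you do not know the RPi's IP address, a common trick is sketched below: "connecting" a UDP socket sends no packets but makes the OS choose the outgoing interface, whose address can then be read back. Run it on the RPi itself. It assumes the web page is served at the root path on host port 8000, and it falls back to `127.0.0.1` when there is no network route.

```python
import socket


def local_ip():
    """Best-effort guess at this machine's LAN IP address.

    Connecting a UDP socket sends nothing; it only makes the OS pick the
    outgoing interface, whose address is then read back with getsockname().
    """
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        s.connect(("192.0.2.1", 80))  # TEST-NET-1 documentation address, never contacted
        return s.getsockname()[0]
    except OSError:
        return "127.0.0.1"  # no route available (e.g. offline)
    finally:
        s.close()


def viewer_url(ip, host_port=8000):
    """URL of the inference web page, assuming it is served at the root path."""
    return f"http://{ip}:{host_port}/"


if __name__ == "__main__":
    print(viewer_url(local_ip()))
```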

Building your own image

I have provided the Dockerfile if you wish to make your own image that employs a custom model.

As building an image on the RPi is painfully slow, I suggest you use the multi-platform build support of Docker buildx to build the ARMv7 image on your much faster x86 machine instead. This relies on QEMU emulation, which you may need to set up for buildx first.

docker buildx build --platform linux/arm/v7 -t YOURDOCKERHUB/image:latest --push .

AMD64 / x86-64

This container packages all the requirements needed to perform inference using a TensorFlow Lite model trained with the TensorFlow Object Detection API, and assumes you are using a Google Coral Edge TPU.

You will also require a webcam plugged into your host, although you can change the source to a network-based camera if you wish.

Prebuilt Container

If you wish to make use of a prebuilt Docker image, you can pull it from Docker Hub using the following command:

docker pull cloudcommanderdotnet/amd64tpuinference:latest

Running the Container

Make sure you have your webcam and Coral USB plugged in.

To run the container, enter the following command:

docker run -it --privileged -p 8000:5000 -v /dev/bus/usb:/dev/bus/usb cloudcommanderdotnet/amd64tpuinference:latest

The container is started in privileged mode, the host's USB bus (/dev/bus/usb) is mounted into the container so it can reach the Coral accelerator, and the container's port 5000 is published on port 8000 of the host.

Now you just have to navigate to http://<host IP address>:8000 in a web browser to see the object detection taking place.

Building your own image

I have provided the Dockerfile if you wish to make your own image that employs a custom model.

docker build -t YOURDOCKERHUB/image:latest .
docker push YOURDOCKERHUB/image:latest